Hello all,

Much has happened since the last version of Mitsuba, so I figured it’s time for a new official release! I’m happy that there were quite a few external contributions this time.

The new features of version 0.5.0 are:

-

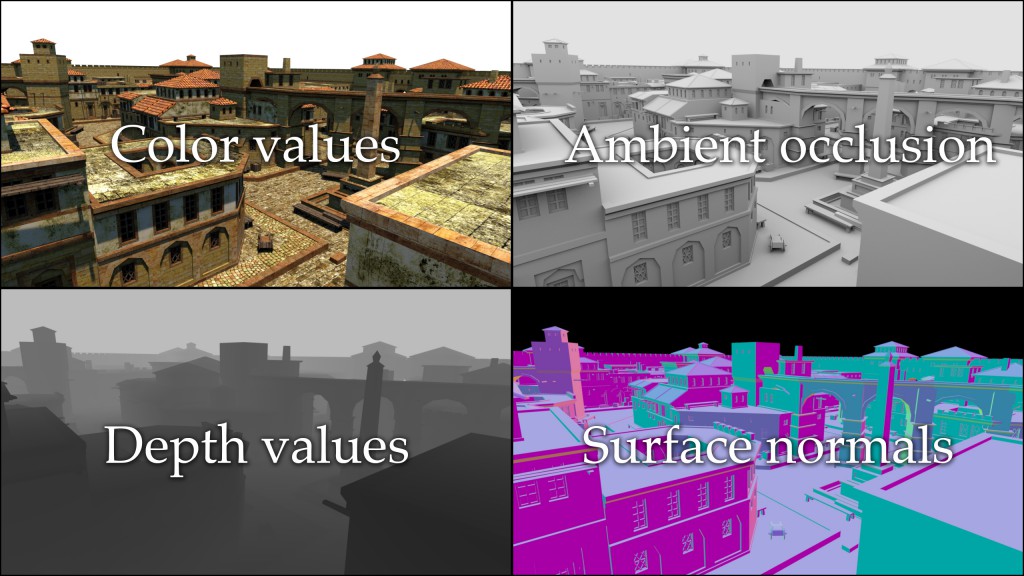

Multichannel renderings:

Mitsuba can now perform renderings of images with multiple channels—these can contain the result of traditional rendering algorithms or extracted information of visible surfaces (e.g. surface normals or depth). All computation happens in one pass, and the output is written to a dense or tiled multi-channel EXR file. This feature should be quite useful to computer vision researchers who often need synthetic ground truth data to test their algorithms. Refer to the multichannel plugin in the documentation for an example.
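As a rough illustration of what such a setup might look like, the following Python sketch generates a scene description fragment with a multichannel integrator that wraps a regular path tracer plus two surface-information channels. The plugin and parameter names used here (`multichannel`, `field`, `shNormal`, `distance`, `pixelFormat`, `channelNames`) are assumptions based on the plugin documentation and should be checked against it; the helper `build_multichannel_scene` is purely hypothetical.

```python
import xml.etree.ElementTree as ET


def build_multichannel_scene():
    """Build a scene fragment with one color channel and two surface channels.

    All plugin/parameter names are assumptions to be verified against the
    Mitsuba documentation; shapes and emitters are omitted for brevity.
    """
    scene = ET.Element("scene", version="0.5.0")

    # Outer integrator that runs all nested integrators in a single pass
    multi = ET.SubElement(scene, "integrator", type="multichannel")
    ET.SubElement(multi, "integrator", type="path")  # traditional rendering
    for field in ("shNormal", "distance"):           # extracted surface data
        sub = ET.SubElement(multi, "integrator", type="field")
        ET.SubElement(sub, "string", name="field", value=field)

    # The film lists one pixel format and one channel name per nested integrator
    sensor = ET.SubElement(scene, "sensor", type="perspective")
    film = ET.SubElement(sensor, "film", type="hdrfilm")
    ET.SubElement(film, "string", name="pixelFormat",
                  value="rgb, rgb, luminance")
    ET.SubElement(film, "string", name="channelNames",
                  value="color, normal, distance")
    return scene


scene_xml = ET.tostring(build_multichannel_scene(), encoding="unicode")
print(scene_xml)
```

The printed XML would still need shapes and light sources before it describes a renderable scene.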

- Python integration: Following in the footsteps of previous versions, this release contains many improvements to the Python language bindings. They are now suitable for building quite complex Python-based applications on top of Mitsuba, ranging from advanced scripted rendering workflows to full-blown visual material editors. The Python chapter of the documentation has been updated with many new recipes that show how to harness this functionality. The new features include:

- PyQt/PySide integration: It is now possible to fully control a rendering process and display partial results using a user interface written in Python. I’m really excited about this feature myself because it will free me from having to write project-specific user interfaces using C++ in the future. With the help of Python, it’s simple and fast to whip up custom GUIs to control certain aspects of a rendering (e.g. material parameters).

The documentation includes a short example that instantiates and renders a scene while visually showing the progress and partial blocks being rendered. Due to a very helpful feature of Python called buffer objects, it was possible to implement communication between Mitsuba and Qt in such a way that the user interface directly accesses the image data in Mitsuba’s internal memory without the overhead of costly copy operations.
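The zero-copy idea can be illustrated without Mitsuba or Qt at all. The following sketch uses only the Python standard library: a `bytearray` stands in for Mitsuba’s internal image memory (an assumption for illustration; the 8-bit RGB layout and all names are hypothetical), and a `memoryview` plays the role of the user interface that looks at the very same bytes through Python’s buffer mechanism.

```python
# Stand-in for the renderer's framebuffer: 8-bit RGB, row-major (assumed layout).
WIDTH, HEIGHT, CHANNELS = 4, 2, 3
framebuffer = bytearray(WIDTH * HEIGHT * CHANNELS)

# The "GUI side" obtains a view through the buffer protocol; no bytes are copied.
view = memoryview(framebuffer)


def pixel_offset(x, y):
    """Byte offset of pixel (x, y) in the row-major RGB buffer."""
    return (y * WIDTH + x) * CHANNELS


# The "renderer side" writes a red pixel into its own memory ...
start = pixel_offset(1, 0)
framebuffer[start] = 255

# ... and the change is immediately visible through the view.
pixel = tuple(view[start:start + CHANNELS])
print(pixel)  # (255, 0, 0)
```

Because the view shares memory with the underlying buffer, a GUI built this way can repaint partial results as soon as the renderer has written them.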

- Scripted triangle mesh construction: The internal representation of triangle shapes can now be accessed and modified via the Python API; the documentation contains a recipe that shows how to instantiate a simple mesh. Note that this is potentially quite slow when creating big meshes due to the interpreted nature of Python.

- Blender Python integration: An unfortunate aspect of Python-based rendering plugins for Blender is the poor performance when exporting geometry (this is related to the last point). It’s simply not a good idea to run interpreted code that might have to iterate over millions of vertices and triangles. Mitsuba 0.5.0 lays the groundwork for future rendering plugin improvements with two new features. First, after loading the Mitsuba Python bindings into the Blender process, Mitsuba can directly access Blender’s internal geometry data structures without having to go through the interpreter. Second, a rendered image can be passed straight to Blender without having to write an intermediate image file to disk. I look forward to seeing these features integrated into the MtsBlend plugin, where I expect that they will improve the performance noticeably.

- NumPy integration: Nowadays, many people use NumPy and SciPy to process their scientific data. I’ve added initial support to facilitate NumPy computations involving image data. In particular, Mitsuba bitmaps can now be accessed as if they were NumPy arrays when the Mitsuba Python bindings are loaded into the interpreter.

For those who prefer to run Mitsuba as an external process but still want to use NumPy for data processing, Joe Kider contributed a new feature for the mfilm plugin to write out binary NumPy files. These are much more compact and faster to load compared to the standard ASCII output of mfilm.

- Python 3.3 is now consistently supported on all platforms.

- On OS X, the Mitsuba Python bindings can now also be used with non-Apple Python binaries (previously, doing so would result in segmentation faults).


- GUI Improvements:

-

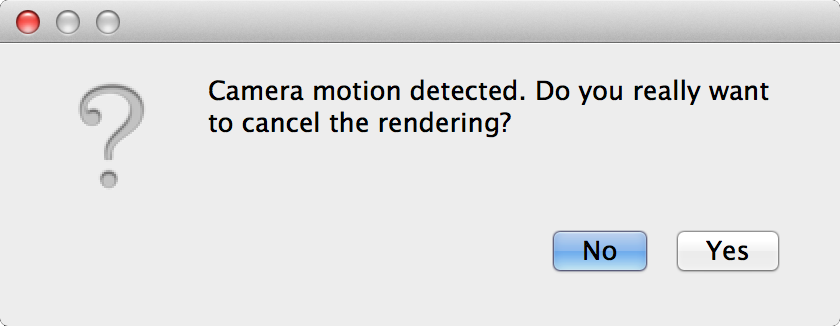

Termination of rendering jobs: This has probably happened to every seasoned user of Mitsuba at some point: an accidental click/drag into the window stops a long-running rendering job, destroying all progress made so far. The renderer now asks for confirmation if the job has been running for more than a few seconds.

- Switching between tabs: The Alt-Left and Alt-Right shortcuts have been set up to cycle through the open tabs for convenient visual comparisons between images.

- Fewer clicks in the render settings: Anton Kaplanyan contributed a patch that makes all render settings fields directly editable without having to double click on them, saving a lot of unnecessary clicks.


-

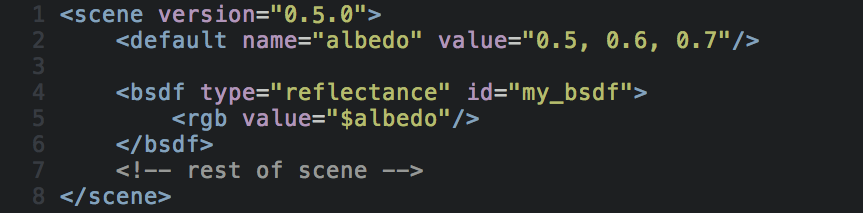

New default tag: One feature that has been available in Mitsuba since the early days was the ability to leave some scene parameters unspecified in the XML description and supply them via the command line (e.g. the albedo parameter in the following snippet). This is convenient but has always had the critical drawback that loading fails with an error message when the parameter is not explicitly specified. Mitsuba 0.5.0 adds a new XML tag named default that denotes a fallback value when the parameter is not given on the command line. The following example illustrates how to use it:
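To make the fallback behavior concrete, here is a small Python sketch that mimics it on a scene fragment. It is not Mitsuba’s loader: the `resolve_parameters` helper is hypothetical, and the exact form of the tag (`<default name="..." value="..."/>`) and of the `$albedo` reference is an assumption to be checked against the documentation. On the real command line, such a parameter would be supplied with something like `-Dalbedo=0.1`.

```python
import re

# Scene fragment with an unspecified parameter ($albedo) and a fallback value.
SCENE_TEMPLATE = """
<scene version="0.5.0">
    <default name="albedo" value="0.5"/>
    <bsdf type="diffuse">
        <spectrum name="reflectance" value="$albedo"/>
    </bsdf>
</scene>
"""


def resolve_parameters(scene_text, cli_params):
    """Substitute $name references, preferring command line values over defaults."""
    # Collect fallback values declared via <default name="..." value="..."/>
    params = dict(re.findall(
        r'<default\s+name="([^"]+)"\s+value="([^"]*)"\s*/>', scene_text))
    # Values given on the command line override the defaults
    params.update(cli_params)

    def substitute(match):
        name = match.group(1)
        if name not in params:
            # Mirrors the pre-0.5.0 behavior: loading fails without a value
            raise KeyError("parameter '%s' was not specified" % name)
        return params[name]

    return re.sub(r'\$(\w+)', substitute, scene_text)


print(resolve_parameters(SCENE_TEMPLATE, {}))                  # uses 0.5
print(resolve_parameters(SCENE_TEMPLATE, {"albedo": "0.1"}))   # uses 0.1
```

Without the default tag in the fragment, the first call would raise an error, which corresponds to the drawback described above.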

- Windows 8.x compatibility: In a prior blog post, I complained about running into serious OpenGL problems on Windows 8. I have to apologize since it looks like I was the one to blame: Microsoft’s implementation is fine, and it was in fact a bug in my own code that was causing the issues. With that addressed in version 0.5.0, Mitsuba now also works on Windows 8.

- CMake build system: The last release shipped with an all-new set of dependency libraries, and since then the CMake build system was broken to the point of being completely unusable. Edgar Velázquez-Armendáriz tracked down all issues and submitted a big set of patches that make CMake builds functional again.

- Other bugfixes and improvements:

- Edgar Velázquez-Armendáriz fixed an issue in the initialization code that could lead to crashes when starting Mitsuba on Windows.

- The command line server executable mtssrv was inconvenient to use on Mac OS X because it terminated after any kind of error instead of handling it gracefully. The behavior was changed to match the other platforms.

- A previous release contained a fix for an issue in the thin dielectric material model. Unfortunately, I did not apply the correction to all affected parts of the plugin back then. I have since fixed this and also compared the model against explicitly path traced layers to ensure a correct implementation.

- Anton Kaplanyan contributed several MLT-related robustness improvements.

- Anton also contributed a patch that resets all statistics counters before starting a new rendering, which is useful when batch processing several scenes or when using the user interface.

- Jens Olsson reported some pitfalls in the XML scene description language that could lead to inconsistencies between renderings done in RGB and spectral mode. To address this, the behavior of the intent attribute and spectrum tag (for constant spectra) was slightly adapted. This only affects users doing spectral renderings; if that includes you, you may want to take a look at Section 6.1.3 of the new documentation and the associated entry on the bug tracker.

I’d also like to announce two new efforts to develop plugins that integrate Mitsuba into modeling applications:

- Rhino plugin: TDM Solutions SL published the first version of an open source Mitsuba plugin for Rhino 3D and Rhino Gold. The repository of this new plugin can be found on GitHub, and there is also a Rhino 3D group page with binaries and documentation. It is based on an exporter I wrote a long time ago but adds a complete user interface with preliminary material support. I’m excited to see where this will go!

- Maya plugin: Jens Olsson from the Volvo Car Corporation contributed the beginnings of a new Mitsuba integration plugin for Maya. Currently, the plugin exports geometry to Mitsuba’s .serialized format but still requires manual XML input to specify materials. Nonetheless, this should be quite helpful for Mitsuba users who model using Maya. The source code is located in the mitsuba-maya repository and prebuilt binaries are here.

Getting the new version

Precompiled binaries for many different operating systems are available on the download page. The updated documentation file (249 pages) with coverage of all new features is here (~36 MB), and a lower resolution version is also available here (~6MB).

By the way: a little birdie told me that Mitsuba has been used in a bunch of SIGGRAPH submissions this year. If all goes well, you can look forward to some truly exciting new features!

Hi!

Congrats on the new release! It looks great! I love the move for better integration with different applications.

A tight integration with blender would be outstanding.

Your work is very impressive.

Absolutely terrific! Thanks Wenzel!

Wonderful! I was waiting for it!

Congrats and thank you!

Ditto on Blender.

Well, it looks like Softimage is going to be discontinued. So, I’ll switch my efforts to the Blender plugin.

Is it really going to be discontinued or is it just a rumor? Can you share the source?

Thanks and really appreciated!

Awesome Update!

MultiChannel rendering is cool!

Also, I created a SketchUp exporter plugin for the Mitsuba renderer.

I uploaded it to the SketchUp user community site:

http://sketchucation.com/forums/viewtopic.php?f=323&t=56460#p512606

Wow, that looks super-promising, thank you. If you’re interested in hosting this on the Mitsuba website with a Mercurial or Git repository [*], please get in touch with me.

[*] https://www.mitsuba-renderer.org/repos/exporters

Wow!

Thanks for your invitation!

I am very interested.

But I do not understand remote repositories well.

And I can only write Ruby scripts.

So I will study properly before joining.

Thanks!

All right, please let me know once you are ready.

The depth of field is noisy.

Is this correct?

Left: ERPT

Right: ERPT + DOF

http://uploda.cc/img/img531e8ec4a94b2.jpg

Hi,

I don’t think that any of the perturbations used in ERPT changes the position on the aperture, hence the noisy DOF. You could increase the “average number of chains” and “samples per pixel” parameters.

Wenzel

I rendered again after increasing them.

http://uploda.cc/img/img5323d0f6b8ccd.jpg

I think there is no change in the noise.

Thanks.

Maybe you can create a ticket on the bugtracker? It’s hard for me to say what is going on without being able to load the scene.

I created it.

https://www.mitsuba-renderer.org/tracker/issues/256

Thanks.

Any plans to create an exporter or integration for 3ds Max?

Not that I know of — let me know if you’re interested in working on this.

ok

Interesting but it would be useful to have a step by step guide to install this program in my win 7 system. Not got anywhere so far using intuition.

No installation is needed: just run mtsgui.exe, then load and render scenes. Some instruction videos are linked from the documentation (or see my Vimeo account).

Hello,

I’m trying to run a Python example for the PyQt integration from the Mitsuba documentation (Section 13.2.11) with no success. There seems to be a mismatch between “NativeBuffer” and the expected type “sip.voidptr” in the instantiation of the QImage class (line 66 for me).

Do you have the same error?

Thanks,

Can you provide a full error message? And perhaps create a bug report on the tracker? Thanks–wenzel

Hello,

The error message is:

2014-05-22 14:55:50 WARN wrk0 [core.cpp:54] Caught a Python exception: Traceback (most recent call last):

File “mitsuba-test.py”, line 42, in workBeginEvent

_ = self._get_film_ensure_initialized(job)

File “mitsuba-test.py”, line 67, in _get_film_ensure_initialized

self.qimage = QImage((self.bitmap.getNativeBuffer()), self.size.x, self.size.y, QImage.Format_RGB888)

TypeError: arguments did not match any overloaded call:

QImage(): too many arguments

QImage(QSize, QImage.Format): argument 1 has unexpected type ‘NativeBuffer’

QImage(int, int, QImage.Format): argument 1 has unexpected type ‘NativeBuffer’

QImage(str, int, int, QImage.Format): argument 1 has unexpected type ‘NativeBuffer’

QImage(sip.voidptr, int, int, QImage.Format): argument 1 has unexpected type ‘NativeBuffer’

QImage(str, int, int, int, QImage.Format): argument 1 has unexpected type ‘NativeBuffer’

QImage(sip.voidptr, int, int, int, QImage.Format): argument 1 has unexpected type ‘NativeBuffer’

QImage(list-of-str): argument 1 has unexpected type ‘NativeBuffer’

QImage(QString, str format=None): argument 1 has unexpected type ‘NativeBuffer’

QImage(QImage): argument 1 has unexpected type ‘NativeBuffer’

QImage(QVariant): too many arguments

And during execution:

Traceback (most recent call last):

File “mitsuba-test.py”, line 89, in _handle_update

if image.width() > self.width() or image.height() > self.height():

AttributeError: ‘NoneType’ object has no attribute ‘width’

Thanks

Guillaume

Hi Guillaume,

please create a ticket on the tracker — the blog comments aren’t really a good place to discuss such issues. That said, just searching for “sip.voidptr” lists some ways of converting Python objects into them: http://nullege.com/codes/search/sip.voidptr

Not sure if that’s the right approach though..

Wenzel

Really good work; I’m really impressed with the project and have been using it for a few months now on my Linux system. The only issue that I found is that there appear to be a few large dark splotches that show up on the model while using the dipole bsdf, but I haven’t yet narrowed this down to actually being Mitsuba’s fault; it could be something in the model causing it or a setting that I’m getting wrong. Are there any plans to update the Python extensions so they work in Python 3?

It should work in Python 3 just fine. If you compiled Mitsuba yourself, you may have to set MITSUBA_PYVER before sourcing ‘setpath.sh’ so that the right paths are set up.
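As a small sketch (an illustrative helper, not part of Mitsuba), the major.minor version of the interpreter you intend to use can be printed as follows; the assumption here is that MITSUBA_PYVER expects a value in this “3.3”-style form.

```python
import sys


def python_major_minor(version_info=sys.version_info):
    """Return the interpreter version as a 'major.minor' string, e.g. '3.4'."""
    return "%d.%d" % (version_info[0], version_info[1])


if __name__ == "__main__":
    # Run this with the interpreter that should load the Mitsuba bindings
    print(python_major_minor())
```

Running it with the target interpreter shows the value to compare against the one that MITSUBA_PYVER is set to.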

I was using a fairly old Arch package. I just updated it and tried again, and now no version of Python works; I get an ‘Import Error: /usr/lib/libboost_python.so.1.55.0: undefined symbol: PyClass_Type’. I think there’s a problem in the Arch package, and I’ll try to build it from source to see if it works. Before the update, it worked with Python 2 but not Python 3.

I built it from source and I can now use Python 3. The only thing that I noticed was that MITSUBA_PYVER was set to 3.3 and I’m using 3.4, so that was probably the problem with the Arch package that I got off of AUR. Thanks a lot for the help.

What is the name of the book referred to as “MI”? I see it cited many times in the file “sunmodel.h”, for example “Results agree with the graph (pg 115, MI)”.

You’ll have to ask the authors of the Utah daylight model, since this is literally taken from their source code. I think it was some kind of illumination society book, but I could be wrong. Maybe you can find it by looking through their references.

Hi people, I’m here to try to solve an issue I ran into using beta 1.0 of Mitsuba! The plugin works fine standalone, but when I try to render directly from the Rhino GUI, an error popup appears and Mitsuba gets stuck on that error...

How can I solve it?

This is the error:

http://discourse.mcneel.com/uploads/default/11388/bbbccc84db44729b.JPG

Any Rhino scene, simple or complex, makes Mitsuba hang.

Hi,

are you using the plugin from mitsuba.org or the version from TDM Solutions SL?

This looks very much like your computer is not set to English, and for that reason something goes wrong in the exporter (which should of course not happen…). If I may ask, what is the language setting on your machine so that I can try to replicate it?

Wenzel

I think it is from TDM Solutions! I don’t remember where I found the plugin.

However, the Rhino language is set to Italian.

http://discourse.mcneel.com/uploads/default/11404/c72ad1cf9bb466bb.JPG

Another issue! This exporter plugin has several problems!

In that case please report these bugs to them — there is nothing I can do here to fix these issues.

Wenzel, could you please provide some usage example of the function TriMesh.fromBlender in python?

The function expects a long type value for the face and vertex pointers, which I don’t quite understand.

Wish you could provide some simple code on how to use this.

Thanks in advance.

Hugo

Here is a Python snippet that uses this function to efficiently export all meshes in a Blender scene into a combined .serialized file: https://gist.github.com/wjakob/e00fe4afad83a2447ffe

In the meantime, this function has been improved twice so I can’t guarantee that the snippet still works without minor modifications.

Note that Francesc Juhé has already integrated this and other cool features into his branch of the Mitsuba Blender plugin. It’s still unstable but should become part of the official branch in the future.

Thanks Wenzel. That was very helpful.

I suppose I would have to write my own fromMax function in order to transfer mesh data directly from 3ds Max.

I was hoping I could use fromBlender to do that but I can’t pass pointers directly from python.

Right, the fromBlender() function assumes a specific internal memory layout used in Blender. That will be completely different in 3ds Max, plus you will need to find a way to extract the pointers from it.

Yes. Unfortunately, the 3ds Max Python API doesn’t expose any pointers directly. I need to build a custom renderer plugin for 3ds Max to get direct access to geometry data.

I’ll give it a try sometime soon and let you know about my progress on it.

Thank you,

Hugo

Hi,

I’ve just started to work on integrating Mitsuba into Carrara (from Daz3D). It should feature an exporter and also be integrated as a renderer.

Exporting lights, camera, shapes is easy enough and my proof of concept works like a charm.

I’m working on shaders now. I will implement specifications for all BSDFs, subsurface and participating media, but I also need an automatic converter that will try to get a BSDF as close as possible to the original Carrara shader (based on Lambert shading).

If you have any clue or experience on how to properly implement this, any help will be welcome.

If the POC continues to go well, I will release it as open source.

Hi Philemo,

that sounds great! Converting materials is really tricky, and I don’t think there is any perfect way to go about it (different systems follow very different material design principles, and Mitsuba is no exception in that regard).

Is there some webpage where you are tracking your progress so that other people using Daz could follow?

Best,

Wenzel

Hi Wenzel,

I was afraid of that. Carrara is now mainly a hobbyist tool and a plugin won’t be used if you have to respecify all the shading before even thinking of rendering. I’m convinced it’s vital to use Mitsuba bsdf, but allowance must be made for ease of use. I’ve made some experiments and I think the lambertian part of Carrara shader (diffuse and specular) can be emulated easily enough using the Ward plugin.

I will set up a development blog as soon as the proof of concept is set up. I have yet to work on the integration of Mitsuba renderer into Carrara rendering system before completing this part.

Anyway, the more I experiment with Mitsuba, the more I like it. You’ve done a great job and I’m so impressed with it I really want to say it

Hi Wenzel,

The initial POC is not going very well.

First, I had a conflict between the Carrara SDK and the Mitsuba SDK. Both use the same typedefs (TVectorn, assert…) and the Carrara SDK doesn’t have a namespace. I solved it by isolating the calls to each SDK in different libraries and having them communicate through neutral parameters.

Now, I have the Plugin Manager freezing after loading the first plugin. I have the message in the log stating it is loaded and nothing afterwards. If I understood your code correctly, it’s stuck somewhere between the loading and the instancing…

Hi Philemo,

I found this blog while also looking into doing a Carrara integration. Mitsuba looks amazing. I’m glad I read this because I would call myself a novice C++ coder (but a good web developer). The XML part made me think it would be easy, but it sounds like I would be biting off way more than I could chew, so I am really happy to hear that you are taking this on. I would be happy to help in whatever way I can.

Hi Charles,

For now, I’m mainly stuck with technical problems. The integration works now, but it’s not stable yet (too many crashes). On that front, I need an experienced C++ developer more than anything else :-(.

But there are other fronts where help would be most welcome:

- Alpha testing (probably by the end of January) on a Windows platform.

- Experimenting with Mitsuba BSDFs and media to improve the translation from Carrara to Mitsuba. You can now export an OBJ or a DAE version of your scene, import it in the Mitsuba GUI and alter the generated XML (it’s what I’ve been doing for some time now). It’s tedious (hence the plugin) but doable. And this is a crucial point for this kind of plugin: allowing inexperienced users to produce nice renders from a shader that already produces nice results with the Carrara renderer, hence reducing the learning curve.

If you want to contact me, here is my (Rot13 encoded) email address : cuvyvccr.puvrhk@zntarenzn.se

Hello, I hope all is well.

I just want to ask.

Can Mitsuba render fire and smoke created in Blender?

Is there any tutorial that can help me?

I would greatly appreciate it, as I am interested in using Mitsuba in a professional project and would also like to contribute financially to Mitsuba.

I look forward to your comments. Greetings.

Hi, this is nice news; I wanted to test Mitsuba 0.5.0. I ran a lot of tests but could not achieve a glass material. I used the dielectric material type. I think the whole Mitsuba community needs a tutorial series about how to use it, or at least a well-documented wiki page for the Mitsuba renderer. I use Blender, and I hope the developers will soon make it the best-integrated renderer for Blender. I really do not want to use Cycles in my commercial work because of noise; I think Mitsuba can achieve a perfect render, and that’s why I need tutorials. Thank you a lot for thinking about 3D artists.

How can I start writing my own bsdfs/shaders for Mitsuba? Any tips?

You can look at some of the existing BSDFs in Mitsuba :). E.g. ‘diffuse’, which is as simple as it gets.

Thanks Wenzel. Will have a look. Any plans to add any hair scattering models to Mitsuba?

Hi Wenzel, any plans for further development of Mitsuba, or is it kind of discontinued? What about more “mainstream” features like render-time displacement?

Thanks, regards.

I gotta tell you. I love Mitsuba. Super fast, and I use it a ton with Sketchup. I know you are a busy fellow, but are there plans in the near future to fully develop the Sketchup export plugin (ie the material editor)?

Thanks again for all of your amazing work. Mitsuba is truly something special!

Hi, there,

I want to know how to render a depth map.

Cheers,

L